I spent ‘some’ time exploring Web sign-in and thought I would share the results of my research.

History

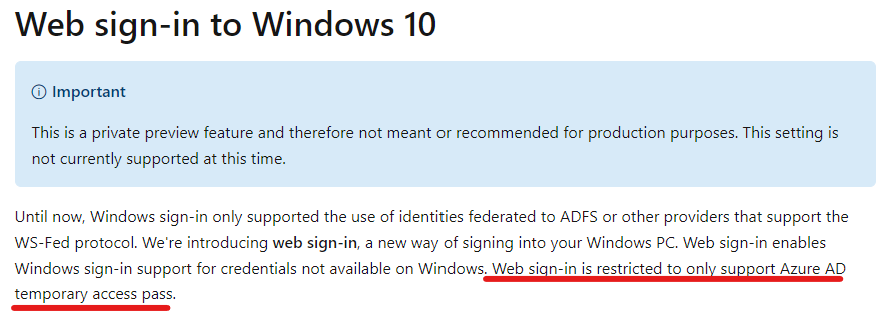

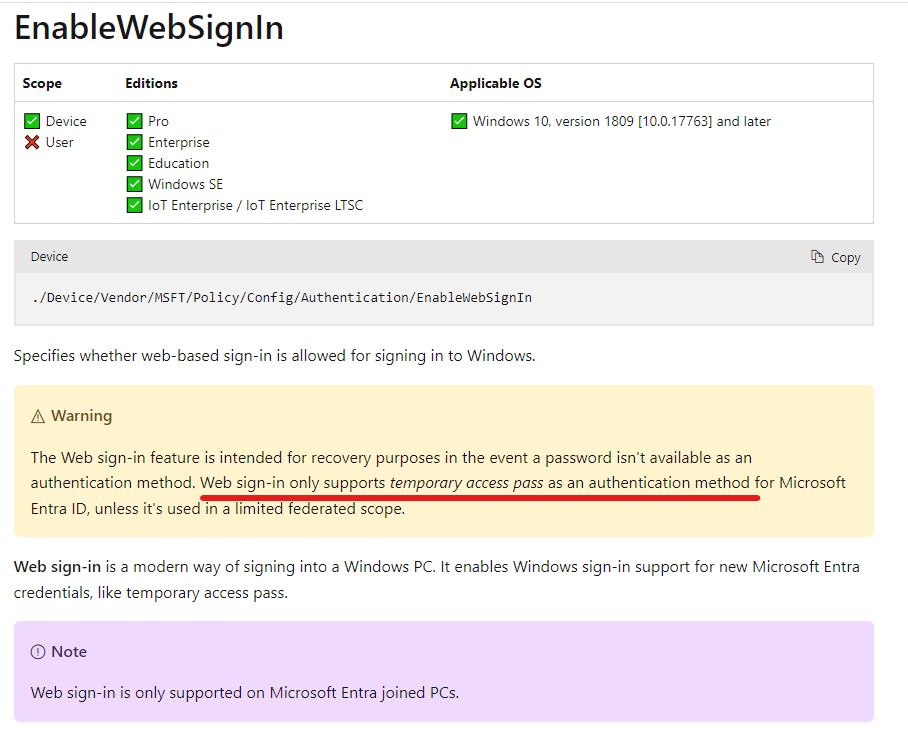

Web sign-in has been available since Windows 10 1809 (link) as a private preview feature, and it was restricted to TAP (Temporary Access Pass).

There was a time when Web sign-in was not limited to TAP, so you could log in using your username + password or passwordless in the popup window (reference). But since this was a preview feature it was not recommended to use it in production.

Present

According to this documentation, Web sign-in has been available since Windows 11 22H2 with KB5030310 (my experience is a bit different). According to the Authentication policy CSP documentation, Web sign-in is restricted to TAP-only:

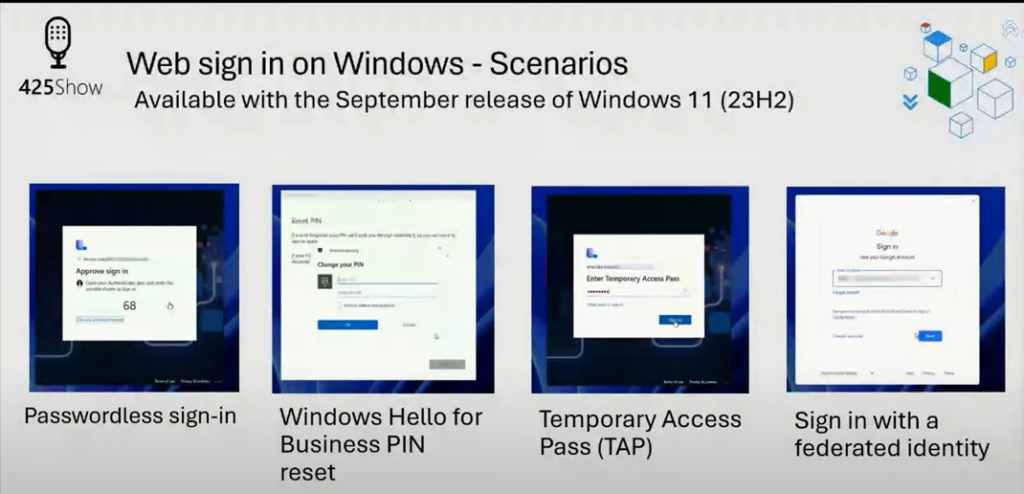

In the ‘October 2023 – What’s New in Microsoft Entra…’ video from The Microsoft 425Show (link), the option to use Web sign-in with a federated identity or passwordless sign-in is presented as a feature of Windows 11 23H2 (expected to be released on November 14, 2023).

While writing this article, I installed the KB5031455 update on a test machine which enabled Web sign-in that is not restricted to TAP-only.

Anyways, I guess at some point in time this will be available for everybody, so let’s see what an IT admin should know about this new way to sign in.

Basics

- Web sign-in is only available on AzureAD (Entra) joined devices; Hybrid joined devices can’t benefit from it

- Internet connectivity is required as the authentication is done over the Internet

- Web sign-in is a credential provider (like the password, PIN or smartcard provider you see on the login screen); the authentication provider is still the AAD Token Issuer

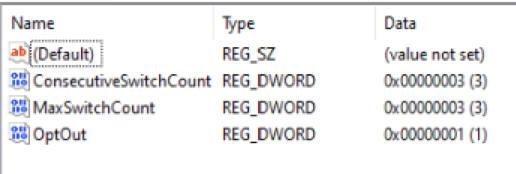

- {C5D7540A-CD51-453B-B22B-05305BA03F07} – Cloud Experience Credential Provider

- Credential Providers can be found in registry here:

Computer\HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Windows\CurrentVersion\Authentication\Credential Providers

- When using the passwordless option in Web sign-in, the Primary Refresh Token will get the MFA claim since it is a multi-factor authentication mechanism.*

- Experience: Web sign-in also allows username + password login, but in this case the MFA claim is not present (so when accessing a resource for which a Conditional Access policy requires MFA, the user will be prompted). This is because “Windows 10 or newer maintain a partitioned list of PRTs for each credential. So, there’s a PRT for each of Windows Hello for Business, password, or smartcard. This partitioning ensures that MFA claims are isolated based on the credential used, and not mixed up during token requests.” (link). So this is not a way to bypass MFA 🙂

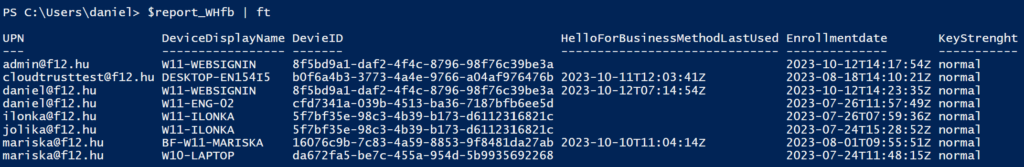

- In my opinion, web sign-in is not intended to be the primary authentication method for a regular use device (one device-one user). This can be used as part of the passwordless journey (before Windows Hello for Business enrollment) and/or on shared devices (where WHfB is not an option but you want to provide a passwordless solution).
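The registry location listed above can also be enumerated from PowerShell to see which credential providers are registered on a device. This is only a minimal sketch: it assumes the (Default) value of each subkey holds the provider's friendly name, which is usually the case but is not guaranteed for every provider.

```powershell
# Registry path as given in this article (PowerShell provider syntax)
$path = 'HKLM:\SOFTWARE\Microsoft\Windows\CurrentVersion\Authentication\Credential Providers'

# Each subkey is named after the provider's GUID;
# the (Default) value is assumed to hold the friendly name
$providers = Get-ChildItem -Path $path | ForEach-Object {
    [pscustomobject]@{
        Guid = $_.PSChildName
        Name = $_.GetValue('')
    }
}

# Show only the Cloud Experience Credential Provider mentioned above
$providers | Where-Object Guid -eq '{C5D7540A-CD51-453B-B22B-05305BA03F07}'
```

Dropping the final filter lists every registered provider, which makes it easy to compare two devices.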

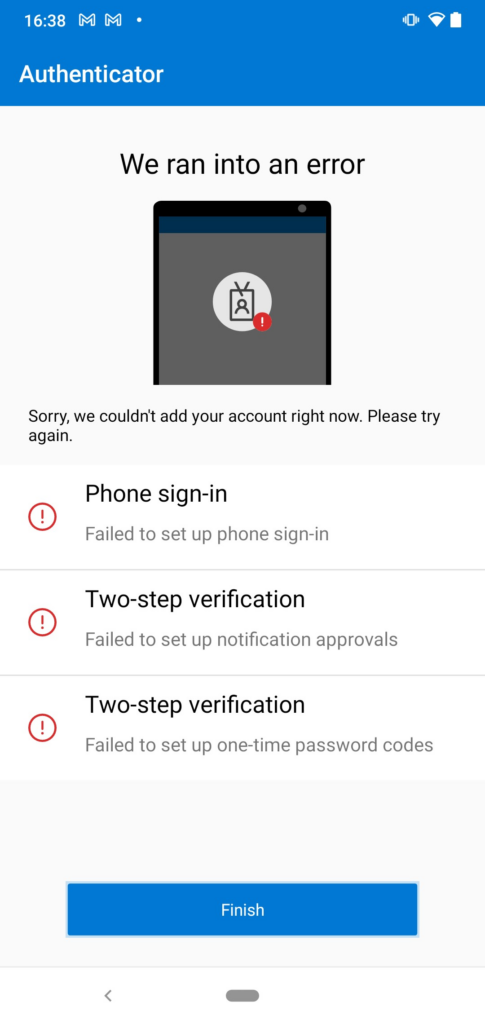

* I was wondering if ‘passwordless’ is truly an MFA mechanism, because ‘passwordless’ is not necessarily MFA by design. It is just one factor in the authentication process (‘something you own’ = the device you use to authenticate), but in the case of ‘Microsoft Authenticator passwordless’ the second factor (biometric or PIN) is enforced by the application (link). If you remove the PIN code and/or the biometric data on a device that is already registered for passwordless, you break your passwordless registration:

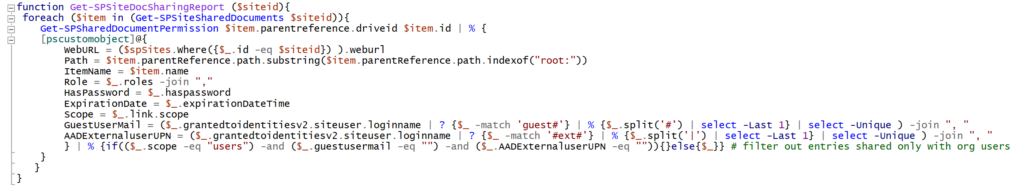

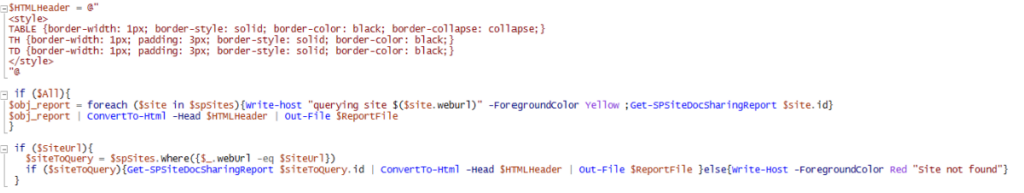

Beyond the Basics

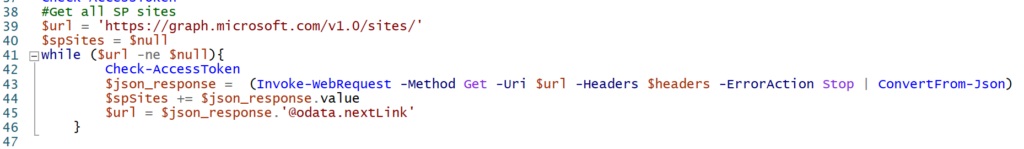

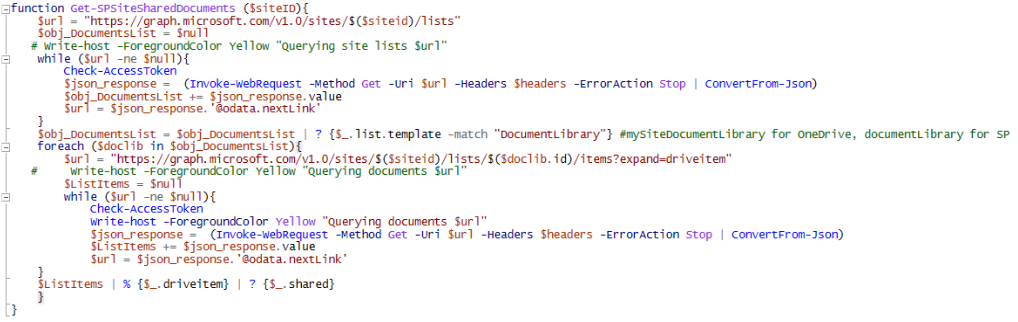

As per the documentation, you can turn it on using Intune or a provisioning package… what is not in the documentation (at least explicitly) is that it can be turned on using the MDM WMI Bridge provider (back in those days I used it a lot for AlwaysOn VPN deployment via SCCM).
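Before changing anything, the current value can be read through the same bridge. The sketch below reuses the class and namespace names from the script later in this article; as far as I know, the MDM WMI Bridge only answers when the session runs in the local SYSTEM context, so treat that as an assumption to verify in your environment.

```powershell
# Class and namespace names are taken from the script in this article
$namespaceName = 'root\cimv2\mdm\dmmap'
$className     = 'MDM_Policy_Config01_Authentication02'

# Returns nothing if the Authentication policy instance has never been created
$policy = Get-CimInstance -Namespace $namespaceName -ClassName $className -ErrorAction SilentlyContinue

if ($policy) {
    # EnableWebSignIn: 1 = on, 0 = off (per the script in this article)
    $policy | Select-Object InstanceID, ParentID, EnableWebSignIn
} else {
    Write-Output 'No Authentication policy instance found in the MDM bridge.'
}
```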

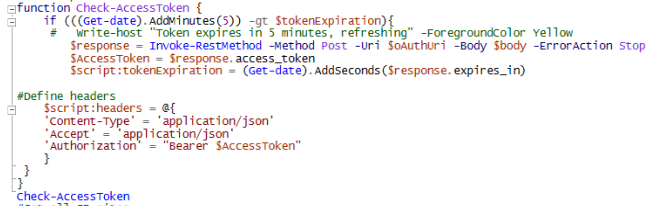

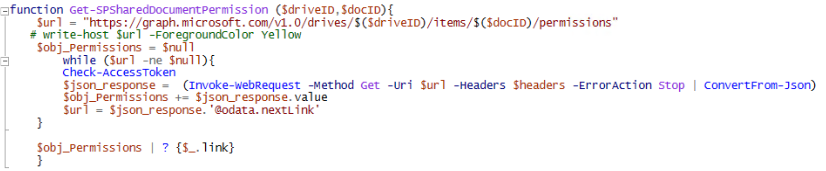

Enable web sign-in via PowerShell

$config_Classname = "MDM_Policy_Config01_Authentication02"

$namespaceName = "root\cimv2\mdm\dmmap"

#Modify web-signIn

$newInstance = New-Object Microsoft.Management.Infrastructure.CimInstance $config_className, $namespaceName

$property = [Microsoft.Management.Infrastructure.CimProperty]::Create("ParentID", "./Vendor/MSFT/Policy/Config", 'String', 'Key')

$newInstance.CimInstanceProperties.Add($property)

$property = [Microsoft.Management.Infrastructure.CimProperty]::Create("InstanceID", "Authentication", 'String', 'Key')

$newInstance.CimInstanceProperties.Add($property)

$property = [Microsoft.Management.Infrastructure.CimProperty]::Create("EnableWebSignIn", "1", 'SInt32', 'Property') #set to 0 to turn it off

$newInstance.CimInstanceProperties.Add($property)

$session = New-CimSession

if (Get-WmiObject -Class $config_Classname -Namespace $namespaceName){

$session.ModifyInstance($namespaceName, $newInstance)

}else{

$session.CreateInstance($namespaceName, $newInstance)

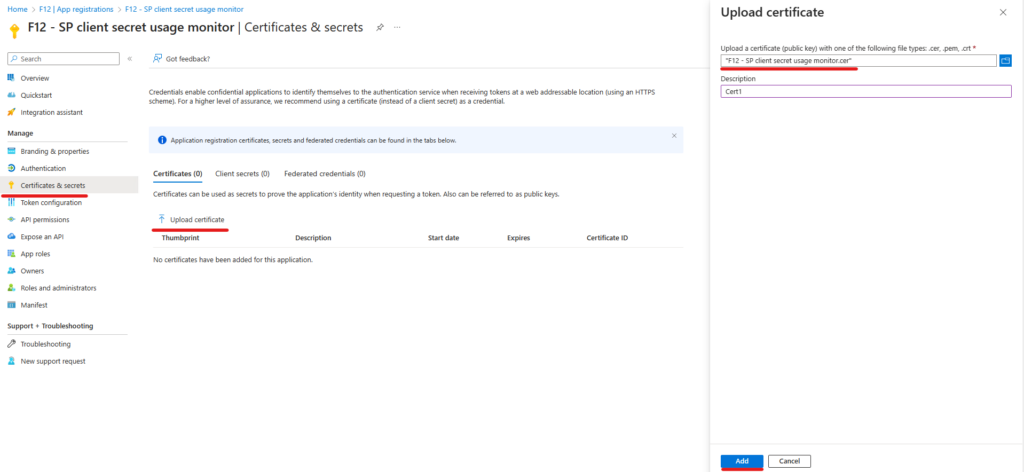

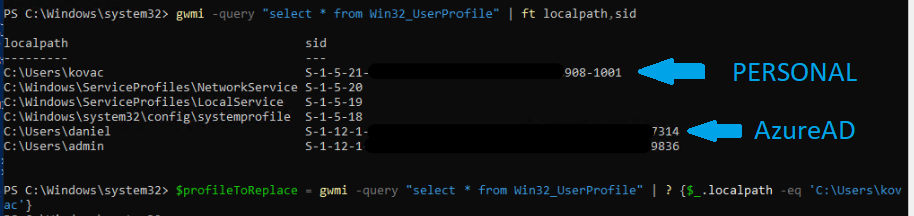

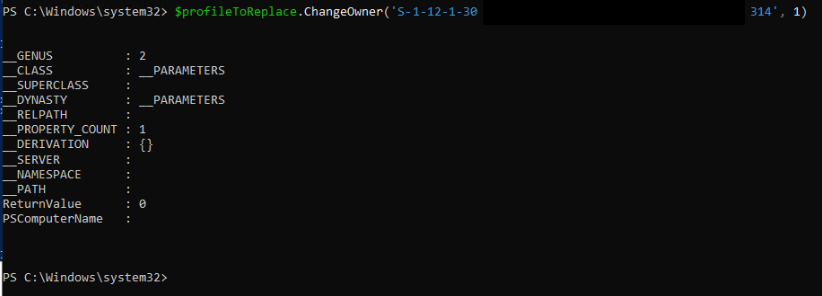

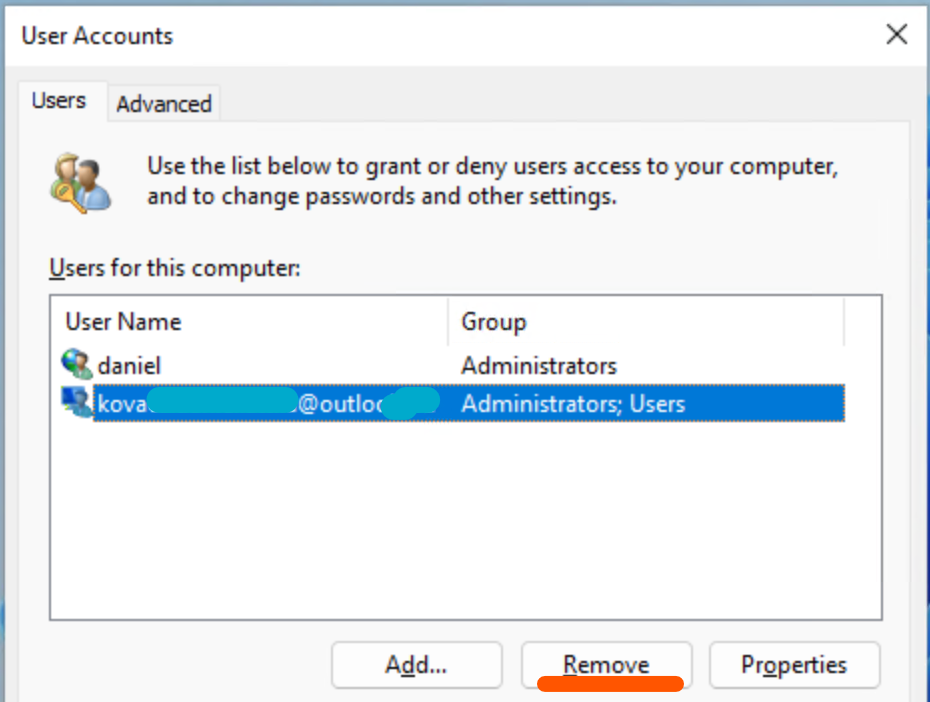

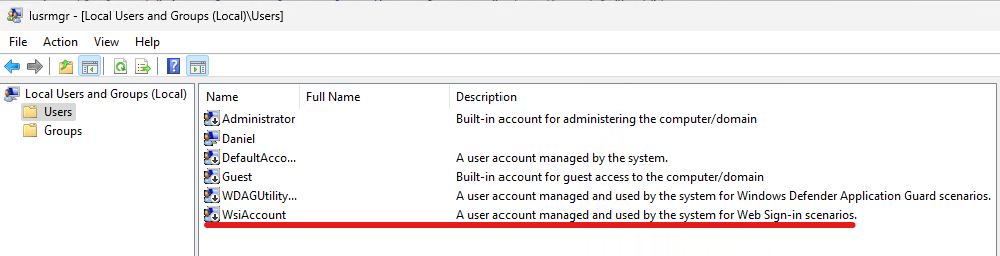

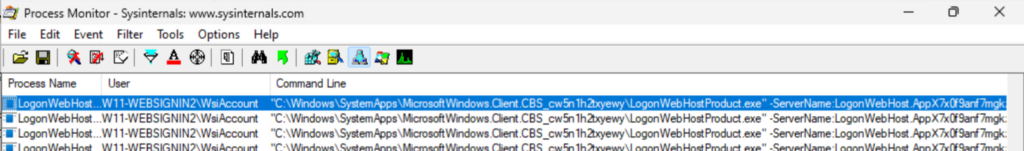

}The process is run under a system managed user account ‘WsiAccount’ which can be seen in the Local users and group console:
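The same account can be checked from PowerShell instead of the console. A minimal sketch, assuming the account name is exactly ‘WsiAccount’ as shown above and that the LocalAccounts cmdlets are available (they ship with Windows PowerShell 5.1):

```powershell
# Look up the system-managed account used by Web sign-in
$account = Get-LocalUser -Name 'WsiAccount' -ErrorAction SilentlyContinue

if ($account) {
    $account | Select-Object Name, Enabled, Description, SID
} else {
    Write-Output 'WsiAccount not found on this device.'
}
```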

The command that is providing the sign-in experience:

"C:\Windows\SystemApps\MicrosoftWindows.Client.CBS_cw5n1h2txyewy\LogonWebHostProduct.exe" -ServerName:LogonWebHost.AppX7x0f9anf7mgkz8zh6haqj4eed5q0jcn1.mca

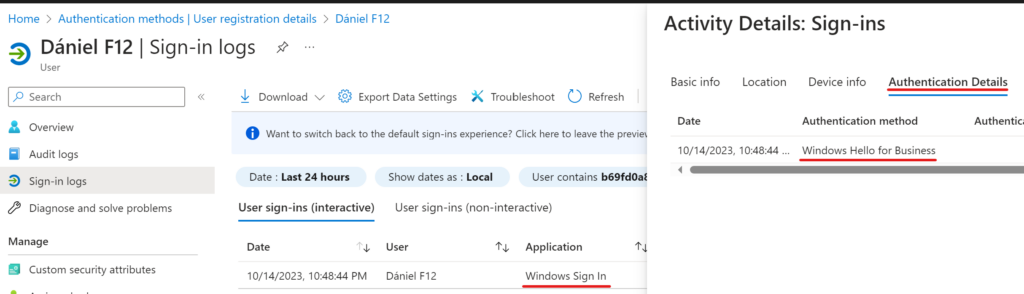

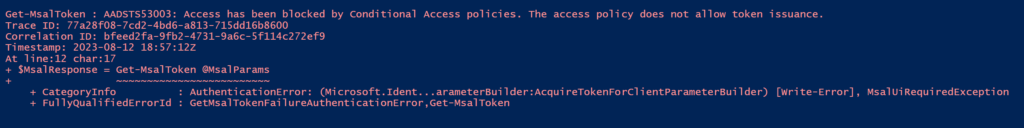

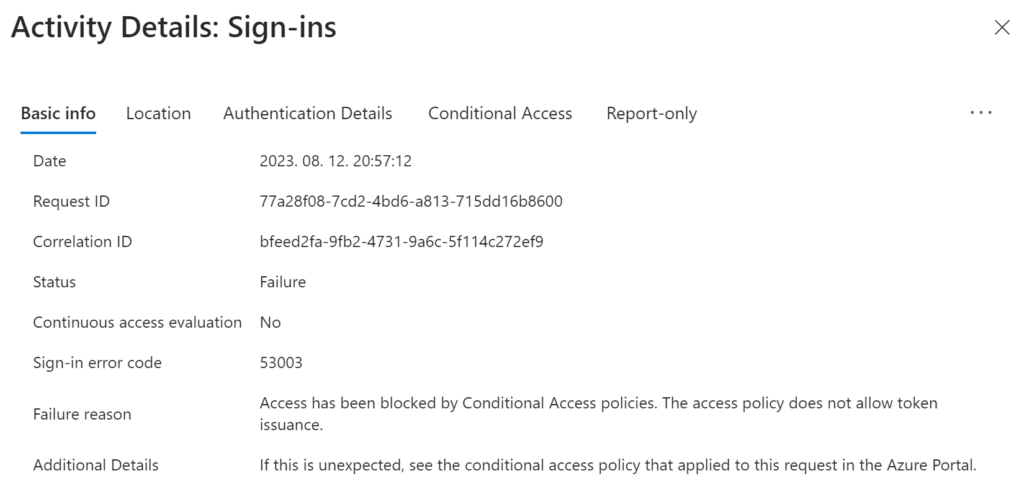

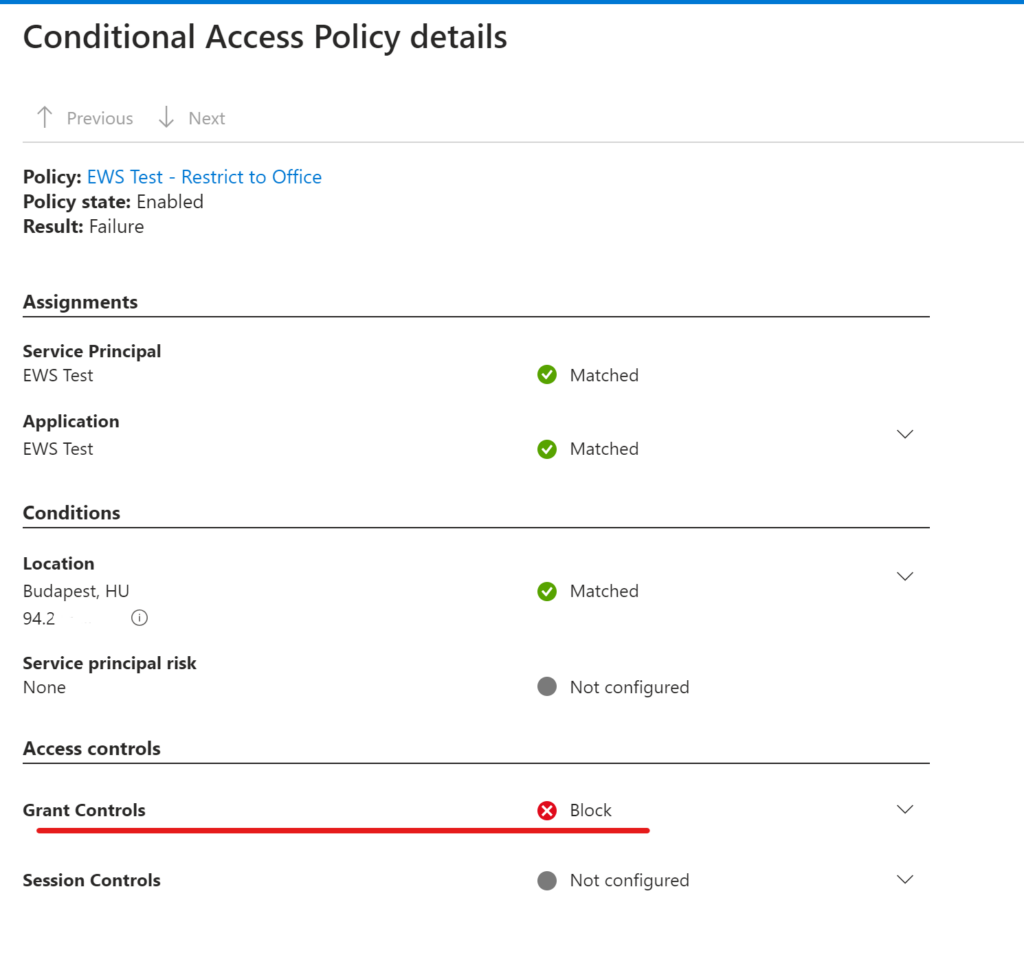

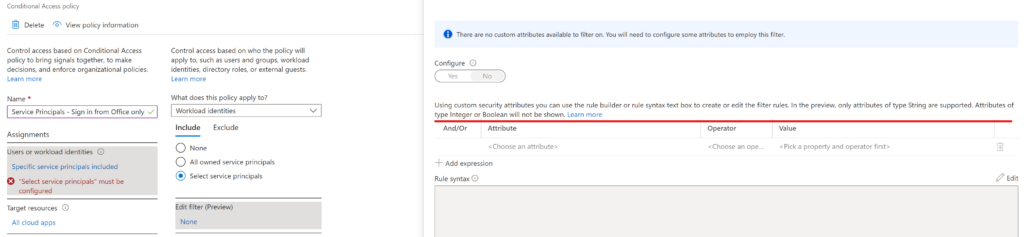

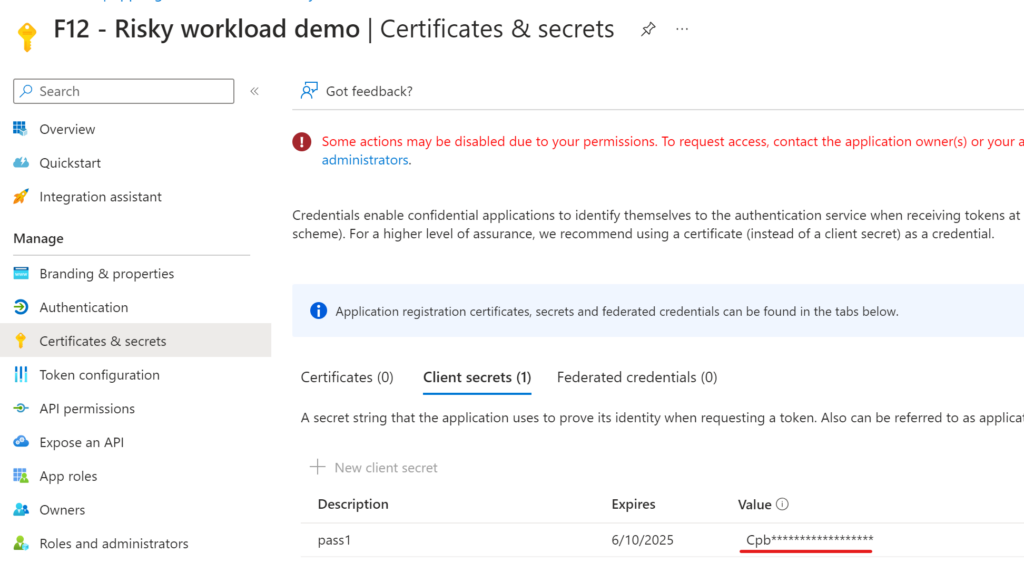

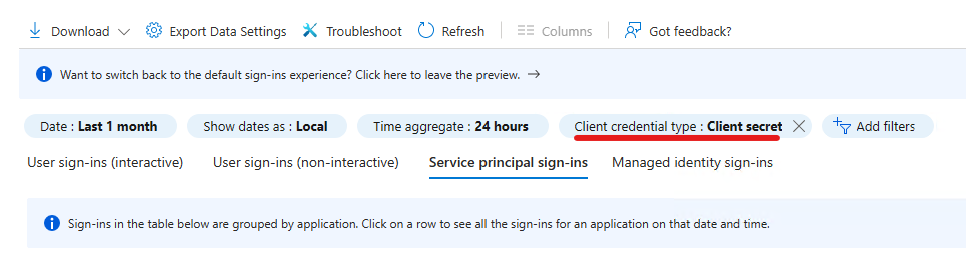

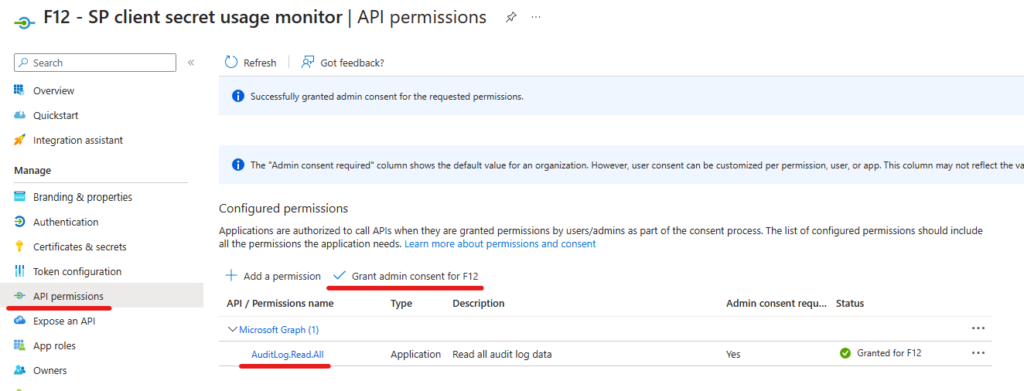

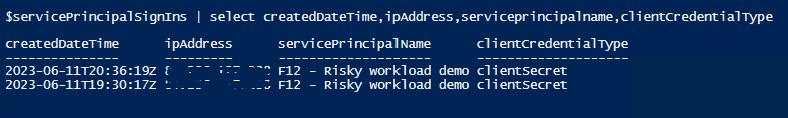

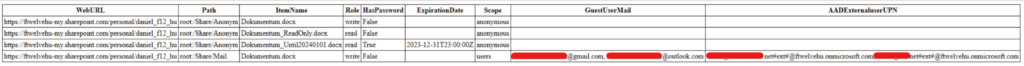

Entra ID sign-in logs:

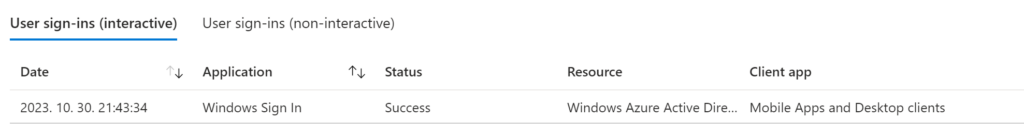

When you sign in the traditional way, you see interactive sign-in events for the user with Application = Windows Sign In and Resource = Windows Azure Active Directory

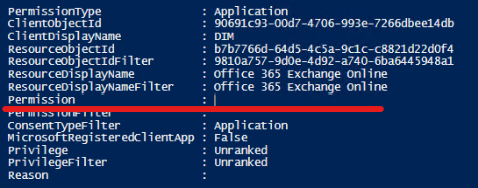

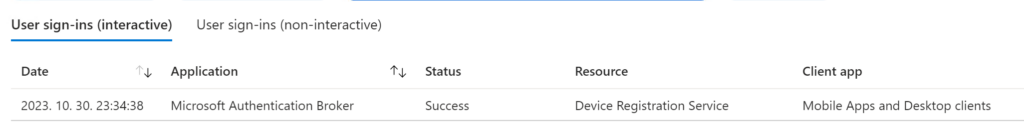

When using Web sign-in, the interactive part is Application = Microsoft Authentication Broker and Resource = Device Registration Service

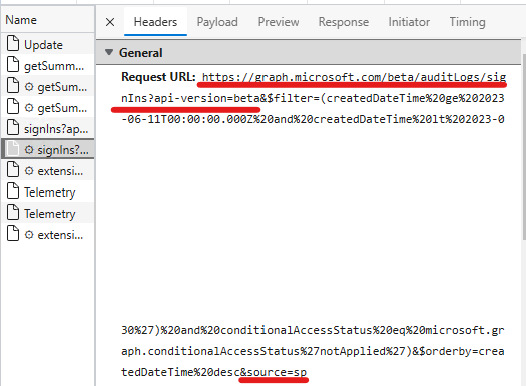

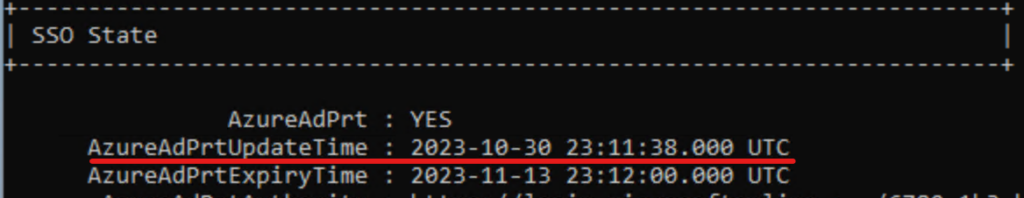

But what is interesting here is that straight after the interactive login, there are two additional non-interactive sign-ins. The first one uses the Windows-AzureAD-Authentication-Provider/1.0 UserAgent:

This timestamp is exactly the same as the AzureAdPrtUpdateTime from dsregcmd /status:

which is followed by a non-interactive Windows Sign In later:
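To compare these timestamps on the device itself, the PRT-related fields can be pulled out of the dsregcmd /status output. The helper below is a hypothetical sketch (the function name ConvertTo-PrtInfo is mine, not a built-in cmdlet), and it assumes the "Name : Value" line layout that dsregcmd prints.

```powershell
# Hypothetical helper: collects every "AzureAdPrt*" field from dsregcmd /status output
function ConvertTo-PrtInfo {
    param(
        # Raw output lines; blank lines are allowed and simply skipped
        [string[]]$Lines
    )

    $prt = @{}
    foreach ($line in $Lines) {
        # Split on the first colon only, because the timestamp values contain colons too
        if ($line -match '^\s*(AzureAdPrt\w*)\s*:\s*(.+)$') {
            $prt[$Matches[1]] = $Matches[2].Trim()
        }
    }
    return $prt
}
```

On a joined device it could be used as `(ConvertTo-PrtInfo -Lines (dsregcmd /status))['AzureAdPrtUpdateTime']`, and the result compared with the timestamp of the non-interactive sign-in in the Entra ID logs.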

Too much time spent for just a few valuable pieces of information… But “it’s not about the destination, it’s about the journey”.